Every organization feels the pressure to “do something with AI.” Employees are eager to adopt tools like Microsoft Copilot, ChatGPT, and Gemini, but CIOs face a starkly different reality: unmanaged AI usage is a growing security, compliance, and governance risk. The real challenge is not interest or enthusiasm; it is ensuring that AI adoption happens responsibly, securely, and with measurable business impact.

This article introduces a three-pillar strategy used by leading enterprises to move from AI-curious experimentation to secure, scalable, AI-enabled operations.

The Pressure Is On, and the Risks Are Real

Across every industry, employees are already using AI, often creatively and often unsafely.

Whether leadership approves it or not, AI is happening.

AI Shadow IT is now one of the fastest-growing security threats inside the enterprise.

Employees under pressure to move faster paste sensitive content into public AI tools that were never designed to protect confidential organizational data.

The result?

- Hidden data exfiltration

- Loss of IP and PII

- Compliance violations

- A rapidly expanding attack surface

This is why many organizations remain stuck in the assessment stage: the pressure to adopt AI is high, but the fear of doing it wrong is even higher.

The solution is not to block AI.

The solution is to govern it, secure it, and enable it, before it grows beyond your control.

The Three Pillars to Move from AI-Curious to AI-Enabled

Below is the enterprise-proven framework organizations use to adopt AI confidently and securely.

Pillar 1: Establish Trust, Govern Your Data Before You Scale AI

AI is only as secure and effective as the data it touches. Without a strong governance foundation, AI will amplify pre-existing weaknesses in your environment.

The Hidden Problem: Unstructured Data Is Your Blind Spot

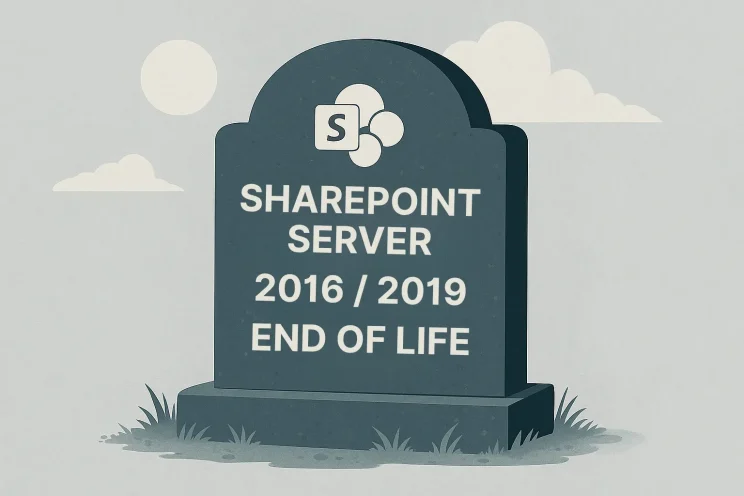

Most sensitive information lives in unstructured content: documents, emails, chats, and files scattered across SharePoint, Exchange, and Teams.

Industry analysts like Gartner and IDC estimate that 80 to 90 percent of enterprise data is unstructured.

This is the very data that grounding-based AI tools rely on.

And here is the critical issue:

AI does not retrieve what an employee should access; it retrieves what they can access.

Outdated permission models, overshared sites, and years of accumulated “temporary access” create the single biggest risk of AI data leakage.
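To make the difference between “can access” and “should access” concrete, here is a minimal Python sketch of permission-trimmed retrieval. The documents, groups, and the `retrieve` helper are hypothetical and greatly simplified; assistants that ground on organizational content generally rely on the platform’s existing permissions rather than code like this. The point it illustrates is that the only check is whether access was granted, so a file overshared to “Everyone” years ago surfaces for any user who asks.

```python
from dataclasses import dataclass, field

@dataclass
class Document:
    title: str
    text: str
    allowed_groups: set[str] = field(default_factory=set)  # groups granted read access

@dataclass
class User:
    name: str
    groups: set[str]

# Hypothetical corpus: the salary file was shared with "Everyone" long ago and never tightened.
CORPUS = [
    Document("Q3 sales deck", "quarterly revenue by region", {"Sales"}),
    Document("Salary bands 2024", "salary bands for all roles", {"HR", "Everyone"}),
    Document("Holiday calendar", "office holidays and closures", {"Everyone"}),
]

def retrieve(user: User, query: str) -> list[str]:
    """Return titles of documents that match the query AND that the user *can* read.

    Nothing here asks whether the user *should* see the document: the only
    filter is the existing permission grant.
    """
    terms = query.lower().split()
    hits = []
    for doc in CORPUS:
        can_read = bool(user.groups & doc.allowed_groups)  # any shared group grants access
        matches = any(t in doc.text.lower() for t in terms)  # naive keyword match
        if can_read and matches:
            hits.append(doc.title)
    return hits

intern = User("intern", {"Everyone", "Marketing"})
print(retrieve(intern, "salary bands"))  # ['Salary bands 2024']
```

The intern never needed the salary file, but the stale “Everyone” grant makes it retrievable. Fixing the grant, not the AI tool, is what closes the gap.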

The Solution: Establish an AI Data Perimeter

Implement enterprise-grade controls such as:

- Data Loss Prevention (DLP)

- Retention and disposal policies

- Sensitivity Labels / Information Protection

- Just-in-time and least-privilege access

These controls apply regardless of which AI tool your users adopt.

What to Do Now: Conduct an AI Impact Assessment

Rather than waiting until the environment is “fully cleaned up” (a process that could take years), leading organizations start with an AI Impact Assessment to:

- identify high-risk exposure points

- evaluate over-permissive access

- understand where sensitive data is already at risk

- prioritize governance investments
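As an illustration of how the “evaluate over-permissive access” and “prioritize” steps can work together, here is a minimal Python sketch that ranks sites by exposure from a permissions export. It assumes a hypothetical export with a site name, a sensitivity label, the principals granted access, and an item count; the field names, the list of broad principals, and the label weights are illustrative assumptions, not the output format or scoring of any particular tool.

```python
from dataclasses import dataclass

# Principals that effectively mean "the whole organization" (illustrative list).
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users", "All Employees"}

# Hypothetical weights per sensitivity label; higher means more damaging if exposed.
LABEL_WEIGHT = {"Public": 0, "General": 1, "Confidential": 3, "Highly Confidential": 5}

@dataclass
class AccessRecord:
    site: str
    label: str
    principals: list[str]
    item_count: int  # number of files carrying this label on the site

def exposure_score(rec: AccessRecord) -> int:
    """Label weight x item count, counted only when a broad principal has access.

    Unknown labels default to a weight of 1.
    """
    broadly_shared = any(p in BROAD_PRINCIPALS for p in rec.principals)
    return LABEL_WEIGHT.get(rec.label, 1) * rec.item_count if broadly_shared else 0

def prioritize(records: list[AccessRecord]) -> list[tuple[str, int]]:
    """Return (site, score) pairs for broadly exposed sites, highest risk first."""
    scored = [(r.site, exposure_score(r)) for r in records]
    return sorted([s for s in scored if s[1] > 0], key=lambda s: s[1], reverse=True)

records = [
    AccessRecord("HR-Archive", "Highly Confidential", ["HR Team", "Everyone"], 40),
    AccessRecord("Finance", "Confidential", ["Finance Team"], 200),
    AccessRecord("Intranet", "General", ["All Employees"], 60),
    AccessRecord("Legal-Old", "Confidential", ["Everyone except external users"], 30),
]
print(prioritize(records))
# [('HR-Archive', 200), ('Legal-Old', 90), ('Intranet', 60)]
```

The Finance site holds more confidential files, but because access is limited to its own team it drops out of the list; remediation effort goes first to the small archive that is open to everyone.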

The assessment becomes the entry point: a strategic blueprint for deploying foundational controls immediately.

Pillar 2: Strengthen Security, Make Compliance an Accelerator, Not a Roadblock

In the age of AI, compliance is not a checkbox. It is a critical enabler of scale.

Auditability and Lineage Are Non-Negotiable

Organizations must track:

- where data originates

- who interacts with it

- how it flows through systems

- how long it should exist

Proper governance ensures full traceability, which is essential for both accountability and regulatory readiness.

Ethical AI Requires Guardrails

Enterprises are accountable not only for data security, but also for the fairness and accuracy of AI outputs.

This means establishing governance that:

- ensures decisions are defensible

- reduces bias

- enforces permissions consistently

- prevents misinformation

- protects sensitive attributes

What to Do Now: Implement AI Risk Management

This is the execution phase, where organizations begin configuring a defensible governance baseline using technologies such as:

- Microsoft Purview

- Insider Risk Management (a Microsoft Purview solution)

- Third-party governance platforms

This creates the secure posture needed to test and scale AI adoption safely.

Pillar 3: Empower People, Invest in the Human Side of AI

The greatest risk to AI adoption is not technology. It is people.

Most AI initiatives fail because employees:

- do not know how to use the tools safely

- fear job displacement

- lack clarity about how AI supports their role

- do not understand how to prompt effectively

To become AI-enabled, organizations must invest in the human side of AI.

Transparency Builds Trust

Successful leaders explain:

- what AI will change

- what AI will not change

- how it enhances productivity

- how existing roles will evolve

With transparency, resistance decreases and adoption accelerates.

The Art of Prompting Is a Core Skill

General training isn’t enough.

Employees need role-specific training in secure AI usage that teaches:

- effective prompting

- grounding inputs safely

- validating outputs

- identifying hallucinations

- avoiding sensitive data exposure

Prompting is no longer a “nice to have”; it is a core digital skill.

What to Do Now: Make User Enablement a Priority

Once your AI Data Perimeter is in place, shift immediately to:

- targeted training sessions

- role-specific guidelines

- secure usage scenarios

- hands-on exercises

This is the adoption phase, where real business value emerges.

The Path Forward

Most organizations already know they want to use AI.

The uncertainty lies in how to adopt it securely and responsibly without stalling innovation.

At Bravo Consulting Group, we believe governance is not a barrier to adoption; it is the accelerator that unlocks responsible scale.

Our fixed-price, modular approach gives organizations:

- a clear strategic roadmap

- the foundation for secure AI

- hands-on implementation for controls

- tailored user adoption programs

- the confidence to move from experimentation to impact

If you’re ready to move from AI-Curious to AI-Enabled, we can help you turn your AI investments into measurable, secure business outcomes, starting today.

Take the Next Step

Start with a brief strategy conversation designed to clarify your risks, your opportunities, and your path to responsible AI adoption.

📅 Book a Strategy Conversation

📩 Prefer to reach out in writing? Submit the Contact Form.

You can also contact us directly at info@bravocg.com